Well, it comes down largely to "what might have been", and an almost-nostalgia. I'm not sure what it would be called - wish fulfillment, maybe? To see that something widely considered impossible was actually possible after all. To see the machine do something, 40 years later, that it never did before.

Dragon's Lair was released to arcades in the summer of 1983, and it was unlike anything ever seen before. Most arcade games were still 8-bit systems with custom hardware for every game. Low resolution displays with limited colors, small 4-color sprites, and beeps and boops were still very much the norm. If you were lucky, you got some parallax scrolling (well, if you were lucky enough to scroll).

Then in strides Dirk the Daring, full screen, fully-animated by Don Bluth's new studio (himself fresh out of Disney Animation!), challenging you to help him through beautifully drawn (and often creepy) dungeons and traps to save the Princess Daphne (who is teased to be more than a little attractive) from an enormous and clever dragon. It has also been widely credited with being the first game to cost 50 cents a play rather than 25 cents. (It was the first one I saw.)

The play style was brand new too. The video would play out, and at an appropriate point, you'd be cued to make an input. You had a single joystick and a sword button, and at any given point generally only one input would be correct (some scenes did allow multiple solutions). Get it right, and Dirk would continue. Get it wrong - or respond too slowly - and Dirk would suffer a horrible, sometimes amusing, fully animated death.

Or you could play Nintendo's Mario Bros (which was a very good game, don't get me wrong).

In fact, let's see what else came out in '83, and these are also amazingly good games.

Vector games were state of the art and the best 3D available, and nothing beat the crisp lines, excellent license, and straight-up thrills of Atari's Star Wars:

Big sprites were also starting to happen, and Nintendo's Punch-Out shone in that respect, even if the characters were heavily scaled up to get there (using two monitors to enhance the experience even more - and my screenshot is pretty heavily scaled too):

But the 3D vector games were sparse (and hidden-line removal was still years off), and the bitmap games were limited by memory in how many frames of animation they could manage. Bigger graphics took more memory, but even Punch-Out, with its large characters and multiple opponents, was less than 300K!

Again, Dragon's Lair blew all that away. Nearly every frame was unique - very little was repeated. There was a good 20 minutes of video there (I can't find the exact count, but that's based on my captures), and at a quality level that no computer of the day could replicate. How was it possible? LaserDisc.

LaserDisc was a video format released for movies in 1978, competing largely with VHS video tape (released in 1976). It looked very much like a pizza-sized DVD. Like VHS, it recorded NTSC composite video frames for playback (apologies for ignoring PAL and other formats). However, the laser pickup allowed a much higher bandwidth, roughly doubling the resolution of the stored image. As a result, LaserDisc was the best quality home picture you could get, and it enjoyed fairly good support.

However, it was much more expensive than the tape machines (Betamax was in there too), and couldn't record, which is why it eventually came to be considered a failed product. (I don't agree that it was. You don't have to be number one to succeed, and LaserDisc had a long, solid run with many vendors involved.)

Anyway, back to 1983, and Advanced Microcomputer Systems, the company that had used LaserDisc to create an interactive storybook named The Secrets of the Lost Woods, decided that they could use animation to better sell their idea. Reportedly inspired by The Secret of NIMH, they hired Don Bluth's studio to do the animation. The computer would simply tell the LaserDisc player which frames to play, and since the disc could hold a good 30 minutes of video, the memory cost was minimal. (Of course, the program was still stored in ROM chips - loading code from an analog LaserDisc wasn't something anyone wanted to try. At least not until Sony did it in 1984. But Dragon's Lair's code was minuscule - only 32k.)

Dragon's Lair was certainly the first popular LaserDisc game, but it actually wasn't the first! Sega's Astron Belt had released just a year previously. This was a somewhat confusing game that overlaid a 3rd person space shooter overtop of clips from various sci-fi movies. Every so often you'd shoot the right area and the entire screen would play back an explosion, or you'd be in the wrong place and die. I played it a few times and liked it, but never really understood it.

(It is perhaps interesting to notice how sharp the computer graphics are overtop of the LaserDisc's composite video playing back B-grade sci-fi - but this WAS high quality in 1982.)

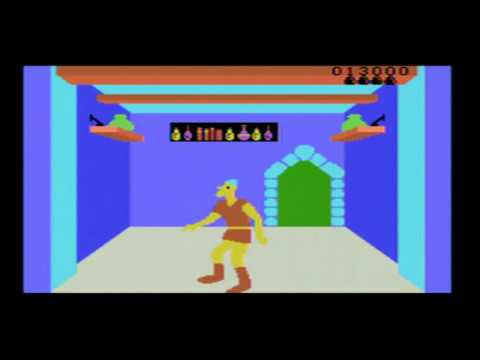

Anyway, the concept of porting Dragon's Lair to the home computers of the time was laughable. The average machine had 32k of memory, 4-16 color graphics, took minutes to load software, and could barely draw a frame of video. However, nobody denied the massive impact of Dragon's Lair - even if later LaserDisc games proved to be far less successful. So people created games inspired by Dragon's Lair, and we ended up with games like this on the Coleco Adam and Commodore 64:

They actually look pretty good, but in fairness these titles looked better on the box than in motion. And yet the base appeal of Dragon's Lair was so strong you wanted to play them ANYWAY. The ultimate of that direction was probably the NES version, which wanted smooth animation above all else, including gameplay:

Coleco, in fairness, actually talked about the REAL Dragon's Lair in the home. The computer requirements of the game were actually pretty small - ANY machine of the day could have handled it. All that would be needed is an interface to a LaserDisc player with a remote control input (and many had one!) But, in the end this didn't happen, since it was considered unlikely that enough households would have a LaserDisc player AND a ColecoVision AND buy the LaserDisc adapter AND want to hook it to their LaserDisc player all for just one game with no expectation of any more. It was probably the right answer, as awesome as it would have been.

We moved quickly to the 16-bit era just a couple of years later, and suddenly motion video wasn't quite as laughable as it used to be. The 16 bit machines had fast disk drives, 512-1024K of memory, and more than enough power to update the entire screen quickly. The Atari ST and Amiga (and even PC, though I never saw that one) notably got diskette-based releases. Sure, lots was redrawn to save memory, more than half the game was missing, and you had to swap disks a lot, but you got the Dragon's Lair experience at home, and in just 4-5MB.

When CD hit gaming, things changed a lot. Suddenly computers and video game systems had access to 640MB of memory - much more than ever before. The PC, CD-i and Sega CD got reasonably complete ports of the direct LaserDisc video game (although the Sega CD version suffers from the Genesis' limited 64-color screen, it's not much worse than the VGA):

This was finally real, and the next advance was the release of DVD, which finally made the full quality video playable -- in some releases even on a standard DVD player, as DVD (with care given to the mastering) can match the quality of the original LaserDisc, or even exceed it. By this point, though, computers and video game systems were all more than capable of playing back the original quality video, digitized and compressed into the favorite format of the day. One advantage of playing it from digital video was that there was no need for black screens between scenes, which on the original arcade were caused by the LaserDisc seeking for the next video clip. An HD-remastered version was released for the HD era, and Dirk had never looked so sharp.

The TI-99/4, my target for this project, was released in 1979. It was updated in 1981 to the version that makes this possible (the 4A), and again in 1983 to piss off Atari by locking them out. It was then cancelled in 1984 because it had no good games. In the base 4A unit, it contains a 16-bit missile control system TMS9900NL running at 3MHz, 256 bytes of RAM, 26K of ROM, a 4 channel tone generator, and a video chip with a 256x192 resolution in 15 colors and its own 16K of video RAM.

Technology has advanced significantly since 1979, and very large memories are now possible. In addition, computers are markedly more capable. My current PC is a relatively obsolete 16-core 64-bit Xeon running at 2.6GHz natively, with numerous TB of disk (which essentially takes the place of the TI's ROM), 128GB of RAM, a more-or-less unlimited sound card, and a graphics card that can push 3840x2160 in 32-bit color several times over and has its own 2GB of video RAM.

So it is of course my pleasure to put the new machine to work producing output for the older one - in this case, I spent years tuning a brute-force image converter that outputs acceptable images for the TI from nearly any source image (although due to playback performance, the TI is only actually playing at 1/2 its own resolution):

I also found a single-chip 128MB flash which is more than enough to hold the roughly 65MB of output frames. They have to be uncompressed and directly accessible because the TI is simply not fast enough to handle streaming or decompression; it needs to just copy bytes to the video RAM and sound chip as fast as it can. Even at half resolution, "as fast as it can" only works out to about 9 fps. But the important thing is -- it works out. This means Dragon's Lair is possible.
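As a rough sanity check on those numbers, here's a back-of-envelope sketch in Python. The 6K frame size and the 10,855 frame count are figures I use later in this article. The variable names are just for illustration, and I'm assuming 1000K to the MB to keep the numbers round.

```python
# Back-of-envelope data budget for the cartridge (illustrative names only).
FRAME_KB = 6            # each converted frame is 6K
FRAME_COUNT = 10_855    # frames of the original game at the TI's playback rate
FPS = 9                 # approximate playback rate at half resolution

total_kb = FRAME_KB * FRAME_COUNT          # all frames, uncompressed
total_mb = total_kb / 1000                 # assumption: 1000K per MB, for round numbers
runtime_minutes = FRAME_COUNT / FPS / 60   # how long the frames last at 9 fps
copy_rate_kb_s = FRAME_KB * FPS            # frame data the TI must move every second

print(f"{total_mb:.0f}MB of frames, {runtime_minutes:.0f} minutes of video, "
      f"{copy_rate_kb_s}K of frame data per second")
```

That comes out to about 65MB and about 20 minutes of video, which lines up with the length of the original footage.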

And so we come back around. Wouldn't it be awesome to see a 'wouldn't it have been awesome if' come true? Dragon's Lair, in a cartridge for the TI-99/4A, just like might have been possible in 1984 (I'm saying 6 months after the arcade came out).

I wanted to do it right, so I have a license from Digital Leisure to produce and release the title. Work has made it difficult to put the pieces in place, and I am running out of time, but it's really close to being real now. Close enough that I'm procrastinating by writing this instead of working on the code. ;) But it's looking really likely.

Which really leaves the final question, and the one that I secretly started this article to talk about. Was it really feasible in 1984? Well, let's look at the challenges I've faced dealing with it today.

First the video. Could we even digitize it? ComputerEyes (Digital Vision) was on the market for the Apple II in 1984, although the quality was far below what we wanted. I seem to remember a magazine article of the day that discussed a very cheap video capture circuit - provided you had a stable video signal. LaserDisc could do that (since each video frame had its own track, pauses were crystal clear). It's hard to find much information today about the history of digitizing video, but the concepts were understood well enough and consumer products were beginning to emerge - let's say it would have been tricky but possible. I can say this much - to capture in color would have taken a fair bit of time - even years later capturing actual moving video was still very, very expensive. But capturing stills - there are lots of ways to trade cost for speed. Let's say you could capture a frame and save it off in an average of 3 seconds (which I think is really generous). At the TI's playback rate, the original game works out to 10,855 frames. So capture would take about 9 hours, which is pretty good.

Also pretty unlikely, IMO, since I did a still-frame capture of a LaserDisc in 2000 and it took about 4 hours per 30 minutes on a machine of that era. But let's remain generous - you could definitely have captured the frames.
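The capture estimate is simple arithmetic, sketched here in Python so you can plug in your own guesses. Only the 3 second figure and the frame count come from the text above. The function name and the slower per-frame times are hypothetical, there just to show how quickly the total grows.

```python
FRAME_COUNT = 10_855  # frames needed at the TI's playback rate

def capture_hours(seconds_per_frame, frames=FRAME_COUNT):
    """Total hours to capture and save every frame, one still at a time."""
    return frames * seconds_per_frame / 3600

# 3s is the generous guess from the text; 10s and 30s are hypothetical.
for seconds in (3, 10, 30):
    print(f"{seconds:>2}s per frame -> {capture_hours(seconds):.1f} hours")
```

At 3 seconds per frame that is the roughly 9 hours quoted above. Every extra second per frame adds about another 3 hours.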

And by the way, we didn't really have any true color graphics formats of note back then, so we're probably storing as uncompressed TIFF, at about 144k per frame, or 1.5GB of data in the end. That would have cost about $420,000 in 1984 dollars (about $900,000 today) and been impossible to attach anyway. Or maybe we were smart and decided GIF, the 256 color format released by CompuServe in 1987, was going to be the way to go and invented it before we started. The average frame compresses down to about 37k and still has enough data for the conversion. So hard disk space is reduced to 400MB, or only about $112,000.

The final option, and probably the only feasible one, is to convert each image as it's captured. The resulting frames are exactly 6k in size, and while you could compress at this point let's assume not. In that case, the resulting data will take 65MB, for about $18,000. You might even be able to attach it. The PC/AT itself was about $4000, for comparison.

(Storage prices: http://www.mkomo.com/cost-per-gigabyte)
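Here's a small Python sketch of the three storage options, for anyone who wants to check my math. The per-frame sizes and the frame count are from the text. The $280 per MB price is an assumption I've backed out of the "400MB for about $112,000" figure rather than a number read directly off the price list, and I'm counting 1000K to the MB.

```python
FRAME_COUNT = 10_855
PRICE_PER_MB = 280   # assumption: 1984 dollars per MB, implied by $112,000 for 400MB

# Approximate size of one frame, in K, for each storage option.
KB_PER_FRAME = {
    "uncompressed TIFF": 144,
    "GIF": 37,
    "converted TI frame": 6,
}

def storage_estimate(kb_per_frame):
    """Return (size in MB, cost in 1984 dollars) for the whole game."""
    size_mb = kb_per_frame * FRAME_COUNT / 1000   # assumption: 1000K per MB
    return size_mb, size_mb * PRICE_PER_MB

estimates = {name: storage_estimate(kb) for name, kb in KB_PER_FRAME.items()}
for name, (size_mb, cost) in estimates.items():
    print(f"{name:>18}: {size_mb:7.0f}MB  ${cost:,.0f}")
```

The results land close to the rounded figures above. The TIFF case comes out a little higher because the text rounds the size down to 1.5GB before pricing it.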

Conversion is a pretty big deal - getting the RGB image dithered into the TI's limitations is a challenge even on my modern machine, which needs 3-5 seconds per frame. But let's start by eliminating all the options and sticking to the simpler dither, and say that in the ideal case my modern machine needs 1s per frame. It's a single-thread conversion, so the cores don't matter, but we're still running at 2.6GHz (over 3GHz, when it's busy). In 1984, PCs were clocking around 6MHz and running the brand-new 80286 processor. It's comparing apples to oranges given the massive internal changes in CPU design, but let's assume clocks to clocks and say my machine is 433 times faster than that machine, meaning each frame would need 433 seconds to be converted. (And the programming wizard who pulls that off will get my hat. The truth is that a single core on this machine is probably a good thousand times faster at a particular task than that AT.)

433 seconds, times 10,855 frames, means that working full-tilt, the AT should complete the conversion in about 55 days. Better put a UPS on that bad boy.
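The same estimate as a Python sketch, in case you want to try other slowdown factors. The clock speeds, the 1 second ideal case and the frame count are from the text. The function name is made up for illustration, and the 1000x case is just my "good thousand times faster" guess from above, not a measurement.

```python
FRAME_COUNT = 10_855
MODERN_SECONDS_PER_FRAME = 1   # ideal case on the modern machine
MODERN_MHZ = 2600              # 2.6GHz Xeon
AT_MHZ = 6                     # 1984 PC/AT with an 80286

def conversion_days(slowdown):
    """Days of non-stop conversion if the old machine is `slowdown` times slower."""
    seconds = FRAME_COUNT * MODERN_SECONDS_PER_FRAME * slowdown
    return seconds / 86_400

clock_ratio = MODERN_MHZ // AT_MHZ   # naive clocks-to-clocks comparison
print(f"{clock_ratio}x slower: {conversion_days(clock_ratio):.1f} days")
print(f"1000x slower: {conversion_days(1000):.1f} days")
```

Clocks to clocks it's a bit over 54 days. With the more realistic thousand-fold gap it's closer to four months.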

So we've got the development system at about $22,000 (plus whatever hackery we need to get it capturing). You'd also need to buy the arcade game to get the disc and the LaserDisc player. That was about $4000, although a replacement player and disc was about $1000 - let's go with that number. Now let's talk about the cartridge. (Box and manual have not changed much over time, and I don't care to investigate that right this moment.)

I'm using a 128MB flash chip, but back in 1984 every kilobyte counted. Let's restrict the cartridge to the 65MB we actually need (although in truth, almost anyone building such a thing back then would probably discard the roughly 20% of video that makes up the mirrored scenes in order to save memory, or even build hardware flipping support to save that money. As we're about to see, megabytes were expensive. But I'll keep the 65MB number).

It's hard to get actual prices for ROM, but RAM is here: https://jcmit.net/memoryprice.htm. Here we see at the start of 1984 that RAM was about $1300/MB. Numerous hunts for mask ROM (the cheapest, if in quantity) gave no actual numbers, but a number of price quotes suggest a range of 1/2 to 1/5th the cost. Let's go with 1/4 the cost and then we can say about $325/MB. 65MB, then, would cost $21,000 (in quantity). We'd better make sure the manual is really nicely printed.
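Since the ROM price is really a guess inside a range, here's a short Python sketch that prices the cartridge across that range. The $1300 per MB RAM price, the 1/2 to 1/5 range and the 65MB size are from the text. The function name is hypothetical.

```python
RAM_PRICE_PER_MB = 1300   # 1984 dollars, start of 1984
CART_MB = 65              # size of the frame data

def rom_cost(divisor):
    """Cartridge ROM cost if mask ROM is 1/divisor the price of RAM."""
    return RAM_PRICE_PER_MB / divisor * CART_MB

for divisor in (2, 4, 5):
    print(f"ROM at 1/{divisor} the RAM price: ${rom_cost(divisor):,.0f}")
```

Even at the cheap end of the range, each cartridge carries nearly $17,000 of ROM.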

Of course, with the $21,000 worth of ROM chips you also have the small problem of space, and the slightly larger problem of power. It's going to be pretty big, so it's probably going to plug into the wall of its own accord and sit in a box behind your console while you plug a ribbon cable into the cartridge port. It costs more than your car, so it might as well.

I don't know if I'm allowed to talk about the price I paid for the license, probably not, but I can tell you right now that in 1984 Dragon's Lair was the hot property. You're not going to walk away with a cheap license here. I wonder what the 8-bit ports paid? There's at least one quote that suggests Coleco paid as much as two million dollars for it. In 1984. Even Dr. Evil couldn't afford that.

So, yes, technically, very technically. You'd need to win the lottery to afford the license, you'd need about $25,000 in 1984 dollars to assemble the development gear, and two months just to prepare the data (if nothing goes wrong). Your final product would cost over $21,000 to assemble (each), and most households would probably brown out when they plugged it in. But... yes, it could have been done.